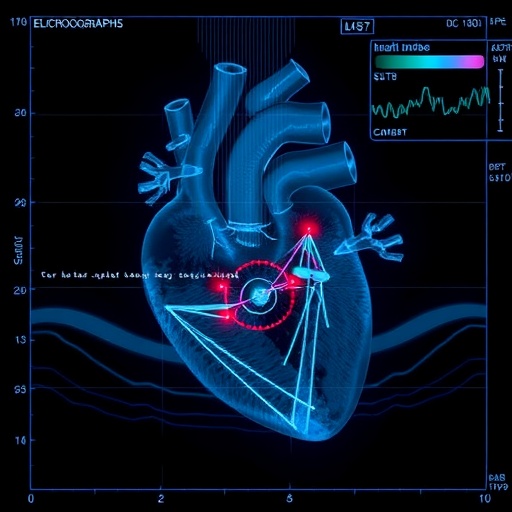

Echocardiography is the cornerstone of cardiac imaging, using ultrasound to capture dynamic video sequences of the heart. These echocardiographic videos provide invaluable insight into cardiac anatomy and function. They underpin clinical diagnosis and therapeutic decision-making across a broad spectrum of cardiovascular diseases. Despite significant advances in imaging technology, interpreting echocardiograms still relies heavily on expert human assessment, a process that is labor-intensive, time-consuming, and prone to inter-observer variability. This has spurred growing interest in using artificial intelligence (AI) to support and augment clinical workflows in cardiology, particularly in echocardiography.

Recent attempts to integrate AI into echocardiographic analysis, although promising, have mostly focused on narrow tasks confined to a single standard view, such as the parasternal long-axis or apical four-chamber view. These single-view systems perform well on specific tasks but cannot analyze the full set of videos acquired during a complete echocardiographic examination. This narrow scope prevents them from combining the complementary anatomical and functional information captured across multiple views, which limits their clinical utility and diagnostic accuracy. Recognizing these limitations, a multidisciplinary team of researchers has unveiled EchoPrime, a groundbreaking vision-language foundation model designed to transform how entire echocardiographic studies are interpreted.

EchoPrime is distinguished by its ability to process and integrate multi-view echocardiographic video at an unprecedented scale. It was trained on more than 12 million echocardiogram videos paired with their clinical text reports. The model uses contrastive learning to build a single, unified embedding for all standard echocardiographic views. In this scheme, each video and its matching report are pulled together in a shared representation space, while mismatched video-report pairs are pushed apart. Because the training data span a wide range of both common and rare cardiac pathologies, EchoPrime acquires a breadth of diagnostic capability well beyond that of traditional single-task AI models.
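The contrastive idea can be made concrete with a CLIP-style symmetric InfoNCE loss, a common way to align paired video and text embeddings. The minimal NumPy sketch below is a generic illustration, not EchoPrime's method. It assumes the video and report embeddings have already been produced by some encoders. The function names and the temperature of 0.07 are illustrative assumptions, not details of EchoPrime's published training setup.

```python
import numpy as np


def l2_normalize(x: np.ndarray) -> np.ndarray:
    """Scale each row to unit length so dot products become cosine similarities."""
    return x / np.linalg.norm(x, axis=1, keepdims=True)


def log_softmax(logits: np.ndarray, axis: int) -> np.ndarray:
    """Numerically stable log-softmax along the given axis."""
    shifted = logits - logits.max(axis=axis, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=axis, keepdims=True))


def clip_style_loss(video_emb: np.ndarray, report_emb: np.ndarray,
                    temperature: float = 0.07) -> float:
    """Symmetric InfoNCE loss; row i of each input is a matching video-report pair.

    Hypothetical sketch: the temperature is a common CLIP-style default,
    not a value reported for EchoPrime.
    """
    v = l2_normalize(video_emb)
    r = l2_normalize(report_emb)
    logits = v @ r.T / temperature        # (batch, batch) scaled cosine similarities
    idx = np.arange(len(v))               # the correct partner of item i is item i
    video_to_report = -log_softmax(logits, axis=1)[idx, idx].mean()
    report_to_video = -log_softmax(logits, axis=0)[idx, idx].mean()
    return float((video_to_report + report_to_video) / 2)


if __name__ == "__main__":
    matched = np.eye(4)                   # toy embeddings: 4 orthogonal "videos"
    print(clip_style_loss(matched, matched))        # near zero: every pair aligned
    print(clip_style_loss(matched, matched[::-1]))  # large: every pair mismatched
```

Matched pairs give a loss near zero and fully mismatched pairs a large one. Minimizing this quantity over many batches pulls each video toward its own report and away from the others in the shared space.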

At the heart of EchoPrime’s architecture lies its view-informed anatomic attention module. This component uses view classification to identify which standard view each video shows. It then weights the information derived from each video accordingly, so that the anatomical structures best visualized in a given view receive the most emphasis. A view in which a valve is barely visible, for example, should contribute little to the assessment of that valve. The mechanism thus respects the clinical nuances of each echocardiographic window. The result is an anatomically coherent synthesis of the imaging data that more closely mirrors how expert readers interpret a study.
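The weighting idea can be sketched under the following assumptions. Each video has a per-video embedding and a view-classifier probability vector. A hypothetical view-to-structure relevance table decides how much each video contributes to each anatomical structure; its numbers are invented here, and a real model would learn such weights. The view names, structure names, and `view_informed_pool` function are illustrative only and do not reproduce EchoPrime's actual module.

```python
import numpy as np

# Hypothetical labels, chosen only for illustration.
VIEWS = ["PLAX", "A4C", "SUBCOSTAL"]
STRUCTURES = ["aortic_valve", "mitral_valve", "right_ventricle"]

# Hypothetical relevance of each view (rows) to each structure (columns).
# These numbers are invented; a trained model would learn such weights.
RELEVANCE = np.array([
    [2.0, 1.5, 0.2],   # PLAX
    [0.5, 2.0, 1.8],   # A4C
    [0.1, 0.3, 1.0],   # SUBCOSTAL
])


def softmax(x: np.ndarray, axis: int = 0) -> np.ndarray:
    """Numerically stable softmax along the given axis."""
    e = np.exp(x - x.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)


def view_informed_pool(video_emb: np.ndarray, view_probs: np.ndarray,
                       relevance: np.ndarray = RELEVANCE):
    """Pool per-video embeddings into one study-level embedding per structure.

    video_emb:  (n_videos, dim) embeddings, one per video in the study
    view_probs: (n_videos, n_views) view-classifier probabilities
    Returns (pooled, weights) with shapes (n_structures, dim) and (n_videos, n_structures).
    """
    # Expected relevance of each video to each structure, given its predicted view.
    scores = view_probs @ relevance
    # Normalize over videos so each structure's weights sum to 1.
    weights = softmax(scores, axis=0)
    # Weighted average of the video embeddings for each structure.
    pooled = weights.T @ video_emb
    return pooled, weights


if __name__ == "__main__":
    views = np.eye(3)                # three videos, one confidently in each view
    emb = np.random.default_rng(0).normal(size=(3, 16))
    pooled, w = view_informed_pool(emb, views)
    for s, name in enumerate(STRUCTURES):
        print(name, "-> mostly from", VIEWS[int(w[:, s].argmax())])
```

Normalizing over videos rather than over structures lets several videos share credit for one structure. A video from an uninformative view is simply down-weighted.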

A further advance is EchoPrime’s retrieval-augmented interpretation. This feature integrates information from all of the videos in an echocardiographic study into a single, cohesive clinical assessment. By aggregating findings across views, the model interprets cardiac form and function holistically. That synthesis previously demanded extensive manual effort from skilled cardiologists. EchoPrime therefore promises to shorten diagnostic turnaround times substantially while improving the reproducibility and accuracy of preliminary clinical assessments.
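The article does not detail what is retrieved, so the following sketches only one plausible reading, and it is an assumption. The new study is embedded, the most similar previously reported studies are looked up in the shared embedding space, and the most common finding among those neighbors is taken. The database, the report strings, and the function names are all hypothetical.

```python
from collections import Counter

import numpy as np


def retrieve_reports(query_emb: np.ndarray, db_embs: np.ndarray,
                     db_reports: list[str], k: int = 3) -> list[tuple[str, float]]:
    """Return the k stored reports whose embeddings are most cosine-similar to the query.

    query_emb:  (dim,) embedding of the new study (or of one anatomical section)
    db_embs:    (n, dim) embeddings of previously reported studies
    db_reports: n report texts aligned row-for-row with db_embs
    """
    q = query_emb / np.linalg.norm(query_emb)
    d = db_embs / np.linalg.norm(db_embs, axis=1, keepdims=True)
    sims = d @ q                          # cosine similarity to every stored study
    top = np.argsort(-sims)[:k]           # indices of the k nearest neighbors
    return [(db_reports[i], float(sims[i])) for i in top]


def majority_finding(query_emb: np.ndarray, db_embs: np.ndarray,
                     db_reports: list[str], k: int = 3) -> str:
    """Hypothetical aggregation step: most frequent report text among the k neighbors."""
    hits = retrieve_reports(query_emb, db_embs, db_reports, k)
    return Counter(text for text, _ in hits).most_common(1)[0][0]


if __name__ == "__main__":
    db = np.array([[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]])
    reports = ["normal LV systolic function",
               "normal LV systolic function",
               "severely reduced LV systolic function"]
    print(majority_finding(np.array([1.0, 0.05]), db, reports, k=3))
```

Retrieval of this kind keeps the output tied to wording drawn from real prior reports. A production system would need far more careful aggregation than a simple majority vote.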

EchoPrime was tested and validated on data from five independent, internationally diverse healthcare systems, reflecting varied patient demographics, imaging equipment, and clinical environments. Across these datasets, EchoPrime achieved state-of-the-art performance on 23 distinct benchmarks of cardiac form and function. It outperformed both specialized task-specific models and prior foundation models that do not integrate multiple views. These benchmarks included assessments traditionally considered challenging for AI, such as detecting subtle wall motion abnormalities and complex valvular pathologies, illustrating the model’s robustness and clinical readiness.

Clinically, the implications of EchoPrime are profound. Cardiologists facing ever-increasing volumes of echocardiographic studies stand to benefit from an AI assistant that delivers automated preliminary assessments with accuracy rivaling that of experienced human readers. Such a tool supports rather than replaces clinician expertise, flagging critical abnormalities and streamlining workflows. Furthermore, EchoPrime learns from videos paired with clinical reports. As a result, its outputs are anchored in the anatomical terms and diagnostic vocabulary that clinicians already use, a crucial factor for user trust and adoption.

Beyond immediate diagnostic support, EchoPrime’s foundational design lays the groundwork for a new generation of AI tools for cardiovascular research and personalized medicine. Because the model encodes echocardiographic videos and report text in a shared semantic space, researchers can explore phenotypic variability and disease trajectories at unprecedented resolution. This capability could facilitate earlier detection of subtle cardiac dysfunction and enable the discovery of novel imaging biomarkers, potentially accelerating drug development and therapeutic innovation.

The development of EchoPrime combined deep expertise in cardiology, machine learning, computer vision, and natural language processing, and it underscores the importance of interdisciplinary collaboration. Such integrated approaches are pivotal for overcoming the entrenched challenges posed by complex medical imaging data. They also help bridge the gap between raw data acquisition and actionable clinical insight. The project exemplifies a paradigm shift from task-specific AI toward versatile foundation models that address the multifaceted nature of medical diagnostics holistically.

Ethical and practical considerations have also shaped EchoPrime’s path toward deployment. Validation across multiple independent datasets supports the model’s generalizability. It also helps limit biases related to population heterogeneity or differences in imaging equipment. The model’s interpretability features, including localized attention maps and clear links to anatomical structures, support transparency and facilitate clinical auditing. Importantly, EchoPrime has undergone rigorous clinical evaluation as a decision-support tool rather than a standalone diagnostic system, in keeping with prevailing regulatory frameworks and clinical practice guidelines.

Looking forward, EchoPrime sets a new benchmark for artificial intelligence applications in echocardiography and cardiac imaging at large. Its success heralds broader adoption of multi-view, multimodal foundation models across other imaging modalities, potentially transforming diagnostic workflows in radiology, pathology, and beyond. Ultimately, EchoPrime’s ability to streamline echocardiographic interpretation carries the promise of improving patient outcomes through more timely, accurate, and comprehensive cardiovascular assessments, signaling a transformative leap in heart health management.

As the cardiology community embraces this new AI frontier, EchoPrime is likely to keep evolving. Future enhancements may include real-time feedback during image acquisition and integration with electronic health records for longitudinal patient monitoring. They may also extend the model to congenital heart disease and other specialized cardiac conditions. The fusion of high-dimensional medical imaging with sophisticated AI not only improves diagnostic precision but also lays a resilient foundation for personalized, data-driven cardiovascular care.

In summary, EchoPrime revolutionizes echocardiographic evaluation by bringing an unparalleled level of integration, scale, and clinical sophistication to AI-assisted diagnosis. It bridges the critical gap between multi-view video data and comprehensive cardiac interpretation, outperforming prior models and demonstrating readiness for real-world clinical deployment. This innovation represents a compelling convergence of technology and medicine. It signals a new era in which AI enhances human expertise to raise the standard of cardiovascular diagnostics worldwide.

Subject of Research: Artificial Intelligence in Echocardiography and Multimodal Cardiac Imaging

Article Title: Comprehensive echocardiogram evaluation with view primed vision language AI

Article References:

Vukadinovic, M., Chiu, IM., Tang, X. et al. Comprehensive echocardiogram evaluation with view primed vision language AI. Nature (2025). https://doi.org/10.1038/s41586-025-09850-x

Image Credits: AI Generated

Tags: advanced cardiac diagnostic tools, AI in echocardiography, artificial intelligence in cardiac imaging, comprehensive echocardiographic examination, echocardiogram analysis technology, EchoPrime model for echocardiography, enhancing clinical workflows with AI, improving diagnostic accuracy in cardiology, integrating AI for holistic analysis, limitations of echocardiographic interpretation, multidisciplinary research in cardiology, reducing inter-observer variability in echocardiograms