In a development that could reshape prostate cancer diagnosis, researchers have unveiled an AI system that mimics the diagnostic acumen of seasoned pathologists while providing clear, interpretable insights into its decision-making process. The technology addresses a long-standing challenge in medical AI: combining expert-level accuracy with explainability, which is crucial for trust and integration in clinical workflows.

Prostate cancer, a leading cause of cancer-related morbidity in men worldwide, demands precise grading to guide effective treatment. The Gleason grading system, developed over half a century ago, remains the gold standard for assessing tumor aggressiveness by examining prostate tissue histology. However, the grading process is notoriously complex and subject to inter-pathologist variability, sometimes leading to inconsistent treatment plans. The newly developed AI promises to streamline and standardize Gleason grading, reducing the subjective discrepancies that have historically impeded consistent patient management.

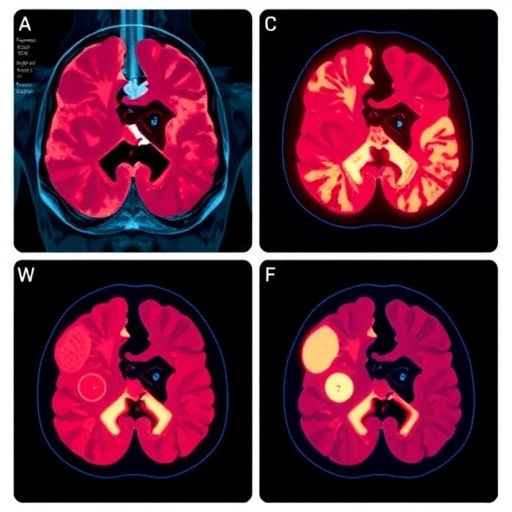

The heart of this development lies in an explainable AI model trained on thousands of digitized prostate biopsy images annotated by expert pathologists. Unlike many “black box” algorithms, which deliver predictions without rationale, this system offers transparent, pathologist-like explanations by highlighting key morphological features within tissue samples that informed its Gleason score assignment. Visual overlays and textual justifications accompany each prediction, effectively bridging the interpretability gap and fostering confidence among clinicians.

Deep neural networks with architectures tailored to histopathological pattern recognition underpin the model’s performance. By integrating multi-scale tissue analysis, the AI captures cellular and glandular structures concurrently, mimicking how human experts evaluate biopsies. This multi-scale approach ensures that both granular detail and broad context are considered, which is essential for accurate Gleason grading. The rigorous training regimen involved iterative fine-tuning against diverse datasets from multiple centers, enhancing the model’s robustness to variations in staining protocols and scanner artifacts.

One of the study’s most remarkable achievements is the AI’s ability to explain its grading process in a hierarchical manner akin to human reasoning. The system identifies primary and secondary patterns within tissue sections, assigns grades accordingly, and computes the composite Gleason score just as a pathologist would. This feature not only aids in diagnosis but also serves educational purposes, offering medical trainees a novel tool to understand complex tissue pathology with guided, AI-assisted annotations.
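The primary-plus-secondary arithmetic described above can be illustrated with a minimal sketch. This is not the study's code: the function name `gleason_from_areas`, the input format (a mapping from Gleason pattern to the fraction of tumor area it covers), the restriction to patterns 3 to 5, and the tie-breaking rule are all assumptions made for illustration. It also uses the simplest convention, in which the most prevalent pattern is primary and the second most prevalent is secondary.

```python
def gleason_from_areas(pattern_areas: dict[int, float]) -> tuple[int, int, int]:
    """Return (primary, secondary, score) from per-pattern tumor area.

    pattern_areas maps a Gleason pattern (3, 4 or 5) to the share of tumor
    area assigned to it. Hypothetical input format, assumed for this sketch.
    """
    # Keep only patterns that actually occur in the sample.
    present = {p: a for p, a in pattern_areas.items() if a > 0}
    if not present:
        raise ValueError("no tumor pattern present")
    if any(p not in (3, 4, 5) for p in present):
        raise ValueError("this sketch only accepts patterns 3, 4 and 5")

    # Rank by area, largest first; on equal area prefer the higher pattern
    # (an assumed tie-break, not taken from the article).
    ranked = sorted(present, key=lambda p: (-present[p], -p))
    primary = ranked[0]
    # With a single pattern, it counts as both primary and secondary.
    secondary = ranked[1] if len(ranked) > 1 else primary
    return primary, secondary, primary + secondary


if __name__ == "__main__":
    # 60% pattern 3 and 40% pattern 4 gives Gleason 3 + 4 = 7.
    print(gleason_from_areas({3: 0.6, 4: 0.4}))
```

The order of the two patterns matters clinically: 3 + 4 and 4 + 3 both sum to 7, which is why the sketch returns the primary and secondary patterns alongside the composite score.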

The implications of this technology extend beyond diagnostics. It holds potential to accelerate the typically time-consuming review processes in pathology labs. By pre-analyzing slides and flagging areas of concern with interpretative reasoning, pathologists can prioritize cases and allocate their expertise more efficiently. Moreover, this AI-driven triage could significantly reduce diagnostic turnaround times, thereby hastening treatment decisions and improving patient outcomes.

Crucially, the system’s explainability addresses growing regulatory and ethical demands for transparency in AI-driven healthcare. Regulatory bodies increasingly require models not only to perform accurately but also to explain their decision-making processes, allowing scrutiny and validation. The AI’s clear, evidence-based explanations are designed to meet these requirements, potentially smoothing its path to clinical deployment and widespread adoption.

The researchers also emphasize the AI’s role in reducing diagnostic disparities, particularly in resource-limited settings where expert pathologists may be scarce. By acting as a reliable and interpretable digital assistant, the system can augment local healthcare capabilities, democratizing access to high-quality prostate cancer grading. This could have profound global health impacts, especially in underserved regions facing escalating prostate cancer burdens.

Technical validation of the AI system demonstrated that it matches or exceeds human expert-level accuracy in multiple blinded trials. Detailed analysis showed excellent concordance between AI-generated Gleason scores and those assigned by pathologists across different institutions. Of particular note was the AI’s performance on challenging borderline cases, where inter-observer variability typically peaks. Here, the system’s interpretative feedback served as a valuable second opinion, guiding consensus building.
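The article does not say which statistic the study used to measure concordance. One common choice for agreement between two raters is Cohen's kappa, which corrects raw agreement for the agreement expected by chance. The sketch below is a plain-Python illustration of that statistic only; the function name `cohens_kappa` and the example scores are hypothetical and are not data from the study.

```python
def cohens_kappa(rater_a: list[int], rater_b: list[int]) -> float:
    """Unweighted Cohen's kappa for two equally long lists of labels."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must score the same non-empty case list")
    n = len(rater_a)
    labels = set(rater_a) | set(rater_b)

    # Fraction of cases on which the two raters agree exactly.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Agreement expected by chance, from each rater's label frequencies.
    expected = sum(
        (rater_a.count(label) / n) * (rater_b.count(label) / n)
        for label in labels
    )
    if expected == 1.0:
        # Both raters used one identical label throughout; agreement is total.
        return 1.0
    return (observed - expected) / (1.0 - expected)


if __name__ == "__main__":
    # Made-up Gleason scores for four cases, for illustration only.
    ai_scores = [6, 7, 7, 8]
    pathologist_scores = [6, 7, 8, 8]
    print(round(cohens_kappa(ai_scores, pathologist_scores), 3))
```

Because Gleason scores are ordinal, a weighted variant that penalizes large disagreements more than small ones is often preferred in practice; the unweighted form is shown here only because it is the shortest to state.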

Integration with existing pathology workflows is seamless due to the system’s compatibility with standard digital slide scanners and laboratory information systems. This plug-and-play design promises minimal disruption to clinical operations while maximizing potential benefits. Additionally, the platform supports continuous learning, allowing it to evolve with new data and adapt to emerging pathological classification schemes or staining technologies.

The potential to extend this pathologist-like explainable AI beyond prostate cancer is vast. Similar frameworks may be adapted for grading other cancers where histological assessments are pivotal, such as breast, lung, or colorectal carcinomas. This model establishes a blueprint for marrying AI precision and transparency in diverse diagnostic domains, ultimately elevating the standard of patient care.

In essence, this explainable AI represents a marriage of cutting-edge machine learning with the nuanced expertise of clinical pathologists, delivering an unprecedented tool in cancer diagnostics. By maintaining interpretability without compromising accuracy, it tackles one of the most stubborn obstacles in medical AI and sets a bold new standard for future technology-driven healthcare innovations.

The study’s success hinges on interdisciplinary collaboration among computer scientists, pathologists, and clinical researchers, reflecting the necessity of cross-domain partnerships in modern medical AI development. Such synergy helps ensure that technological advancements align with genuine clinical needs and can be safely and effectively translated into patient care.

Looking forward, ongoing research will focus on clinical trials that integrate this AI tool into live diagnostic workflows to assess its real-world impact and acceptance. Feedback from practicing pathologists will be invaluable in refining user interfaces and explanatory mechanisms so that they better align with day-to-day clinical practice.

Ultimately, the introduction of pathologist-like explainable AI for Gleason grading signifies a pivotal moment in precision oncology, enabling more reliable, accessible, and transparent cancer diagnosis. As this technology advances and proliferates, it is poised to transform the landscape of pathology, enhancing the accuracy and efficiency of cancer grading while empowering clinicians with unprecedented insight into complex diagnostic decisions.

Subject of Research: Prostate cancer grading using explainable artificial intelligence models.

Article Title: Pathologist-like explainable AI for interpretable Gleason grading in prostate cancer.

Article References:

Mittmann, G., Laiouar-Pedari, S., Mehrtens, H.A. et al. Pathologist-like explainable AI for interpretable Gleason grading in prostate cancer. Nat Commun 16, 8959 (2025). https://doi.org/10.1038/s41467-025-64712-4

Image Credits: AI Generated

Tags: accuracy in cancer grading, AI in prostate cancer diagnosis, AI mimicking human pathologists, digital pathology advancements, enhancing trust in medical AI, explainable artificial intelligence in healthcare, Gleason grading system for prostate cancer, improving patient management in oncology, interpretability in machine learning, prostate biopsy image analysis, reducing variability in cancer diagnostics, standardizing cancer treatment protocols