In medical diagnostics, the combination of artificial intelligence and imaging technologies is changing how clinicians detect and manage cancer. A 2025 study in Nature Communications by Shen, Yang, Sun, and colleagues adds to this shift by introducing an explainable multimodal deep learning framework that uses ultrasound imaging to predict lateral lymph node metastasis (LLNM) in thyroid cancer patients. The approach could improve current diagnostic protocols and treatment planning, increasing prognostic accuracy while reducing unnecessary surgical interventions.

Thyroid cancer is one of the most common endocrine malignancies worldwide, and its incidence has risen over the past few decades. Lateral lymph node metastasis strongly influences patient outcomes and is associated with disease recurrence and, potentially, mortality. Despite its clinical significance, LLNM remains difficult to identify before surgery because of the complex anatomy of the cervical lymph nodes and the limitations of conventional imaging techniques.

Because it is non-invasive, inexpensive, and widely available, ultrasound has been the primary modality for evaluating thyroid nodules and regional lymph nodes. However, its diagnostic accuracy depends heavily on the operator’s expertise, and interpretations vary between readers. To address these shortcomings, Shen and colleagues used deep learning, a branch of artificial intelligence that learns intricate patterns from large datasets, to build a predictive model that integrates multimodal ultrasound information.

The core innovation is the model’s explainability. Earlier AI tools have shown impressive predictive capabilities, but their “black box” nature has undermined clinical trust and limited adoption. In this research, the authors built a framework that not only predicts LLNM accurately but also explains its decisions. It does so with attention mechanisms and visualization techniques that highlight which ultrasound features contribute most to each prediction, so clinicians can cross-check the model’s reasoning against their own reading of the images.

Methodologically, the study draws on a large collection of ultrasound images from thyroid cancer patients who underwent lateral neck dissection. The images include both gray-scale and Doppler ultrasound, capturing the structural and vascular characteristics of the lymph nodes. The deep learning architecture uses convolutional neural networks (CNNs) to process the multimodal inputs, whose features are then fused to produce a risk-stratification output.

In addition to image data, the model incorporates clinical variables such as patient demographics and tumor characteristics, giving it context that the images alone do not provide. Combining these sources reflects the multifactorial nature of metastasis and improves predictive precision beyond what any single source achieves on its own. It also mirrors how clinicians actually make decisions, weighing imaging findings together with clinical information.
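The article does not describe exactly how the image branches and clinical variables are combined inside the network. As a rough illustration only, the sketch below shows one common pattern, feature-level concatenation fusion: feature vectors from each source are joined into a single vector and passed through a linear layer with a sigmoid to give a risk value between 0 and 1. The function names, feature values, and weights are hypothetical placeholders standing in for CNN embeddings and learned parameters; this is not the authors’ architecture.

```python
import math
from typing import List, Sequence


def concat_fusion(gray_feats: Sequence[float],
                  doppler_feats: Sequence[float],
                  clinical_feats: Sequence[float]) -> List[float]:
    """Join per-source feature vectors into one fused vector.

    Hypothetical: in a real model the first two vectors would be embeddings
    from modality-specific CNN branches; here they are plain lists of floats.
    """
    return list(gray_feats) + list(doppler_feats) + list(clinical_feats)


def risk_score(fused: Sequence[float], weights: Sequence[float], bias: float) -> float:
    """Linear layer followed by a sigmoid, giving a risk value in [0, 1]."""
    if len(fused) != len(weights):
        raise ValueError("fused features and weights must have the same length")
    logit = bias + sum(w * x for w, x in zip(weights, fused))
    # Numerically stable sigmoid: avoid exp() overflow for large |logit|.
    if logit >= 0:
        return 1.0 / (1.0 + math.exp(-logit))
    z = math.exp(logit)
    return z / (1.0 + z)


# Toy example: every number below is a made-up placeholder, not study data.
gray = [0.8, 0.1]        # stand-in gray-scale embedding
doppler = [0.5]          # stand-in Doppler embedding
clinical = [1.0, 0.3]    # stand-in normalized clinical variables
weights = [1.2, -0.4, 0.9, 0.6, 0.2]  # placeholder, not learned weights

fused = concat_fusion(gray, doppler, clinical)
risk = risk_score(fused, weights, bias=-1.0)
print(f"fused length = {len(fused)}, risk = {risk:.3f}")
```

In a trained model these weights would be learned jointly with the CNN branches. More elaborate schemes, such as attention-based or gated fusion, weight each modality adaptively instead of simply concatenating.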

The model was validated with rigorous statistical analyses, including cross-validation on internal cohorts and testing on external patient groups to assess robustness and generalizability. The explainable deep learning framework outperformed conventional ultrasound assessment, achieving significantly higher sensitivity, specificity, and overall accuracy in predicting LLNM. These results suggest the model could become a valuable tool in preoperative evaluation.
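The article does not quote specific figures, but the three metrics it names have standard definitions in terms of the confusion matrix: sensitivity = TP / (TP + FN), specificity = TN / (TN + FP), and accuracy = (TP + TN) / total. The sketch below computes them for binary labels (1 = LLNM present) and adds a plain k-fold index splitter to show what cross-validation partitions look like. It is illustrative, not the study’s evaluation code; the toy labels are invented, and the fold count and the absence of shuffling or stratification are simplifying assumptions.

```python
from typing import Dict, List, Sequence, Tuple


def diagnostic_metrics(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    """Sensitivity, specificity, and accuracy for binary labels (1 = LLNM present)."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # metastasis correctly flagged
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # metastasis missed
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # negative correctly cleared
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # negative wrongly flagged
    nan = float("nan")
    return {
        "sensitivity": tp / (tp + fn) if tp + fn else nan,
        "specificity": tn / (tn + fp) if tn + fp else nan,
        "accuracy": (tp + tn) / len(pairs) if pairs else nan,
    }


def k_fold_splits(n: int, k: int) -> List[Tuple[List[int], List[int]]]:
    """Partition indices 0..n-1 into k contiguous folds, returning (train, test) pairs.

    Simplified: real studies usually shuffle and stratify by label, and split
    by patient so images from one patient never land in both train and test.
    """
    if not 1 < k <= n:
        raise ValueError("require 1 < k <= n")
    splits = []
    start = 0
    for i in range(k):
        size = n // k + (1 if i < n % k else 0)  # spread the remainder over the first folds
        test = list(range(start, start + size))
        train = [j for j in range(n) if j < start or j >= start + size]
        splits.append((train, test))
        start += size
    return splits


# Toy labels and predictions (made up for illustration, not study results).
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 0, 0, 0, 0, 1, 0]
print(diagnostic_metrics(y_true, y_pred))
for train_idx, test_idx in k_fold_splits(n=10, k=3):
    print(len(train_idx), test_idx)
```

Sensitivity matters most when the clinical cost of a missed metastasis is high, while specificity governs how many patients would be sent for unnecessary dissection, which is why studies like this one report both.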

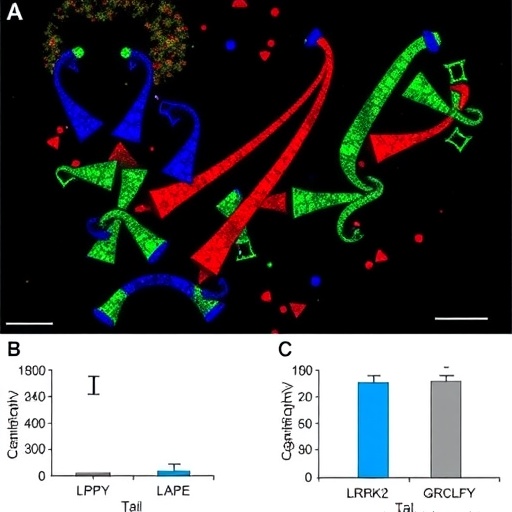

To make the predictions interpretable, the researchers generated heatmaps and saliency maps from the network’s attention layers. These visual explanations highlighted the regions within the lymph nodes and surrounding tissue that the model treated as the strongest contributors to metastatic likelihood. Such insights can help radiologists and surgeons pinpoint suspicious areas, refine biopsy strategies, and tailor surgical planning to individual patients.
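The article does not detail how the attention layers are built. The sketch below assumes simple dot-product attention pooling over a grid of image patches: each patch feature is scored against a query vector, the scores are normalized with a softmax, and the resulting weights both pool the features and, reshaped to the patch grid, form a coarse heatmap. The patch features, query vector, and grid size are hypothetical stand-ins for CNN outputs and learned parameters. Saliency maps are also often computed differently, for example from gradients of the output with respect to the input pixels.

```python
import math
from typing import List, Sequence, Tuple


def softmax(scores: Sequence[float]) -> List[float]:
    """Normalize scores into weights that are positive and sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_pool(patch_feats: Sequence[Sequence[float]],
                   query: Sequence[float]) -> Tuple[List[float], List[float]]:
    """Dot-product attention pooling over image patches.

    Each patch is scored against the query vector (hypothetical here; learned
    in practice), scores are softmax-normalized, and the weights are used to
    average the patch features. Returns (pooled_feature, per_patch_weights).
    """
    scores = [sum(q * f for q, f in zip(query, feat)) for feat in patch_feats]
    weights = softmax(scores)
    dim = len(patch_feats[0])
    pooled = [sum(w * feat[d] for w, feat in zip(weights, patch_feats)) for d in range(dim)]
    return pooled, weights


def weights_to_heatmap(weights: Sequence[float], rows: int, cols: int) -> List[List[float]]:
    """Reshape row-major patch weights into a rows x cols attention heatmap."""
    if len(weights) != rows * cols:
        raise ValueError("number of weights must equal rows * cols")
    return [list(weights[r * cols:(r + 1) * cols]) for r in range(rows)]


# Toy 2 x 2 grid of patch features; patch 2 (bottom-left) aligns best with the query.
patches = [[0.0, 1.0], [0.2, 0.0], [3.0, 0.5], [0.1, 0.1]]
query = [1.0, 0.0]  # placeholder for a learned attention query
pooled, weights = attention_pool(patches, query)
heatmap = weights_to_heatmap(weights, rows=2, cols=2)
for row in heatmap:
    print(" ".join(f"{w:.2f}" for w in row))
```

In a real system the heatmap would be upsampled to the ultrasound image’s resolution and overlaid on it, so the highlighted cells point clinicians to specific regions of the node.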

The implications of this work extend beyond thyroid cancer. By establishing a blueprint for explainable multimodal deep learning in oncologic imaging, the study sets a precedent for similar applications in other tumor types and anatomical sites. Its balance of high performance and interpretability addresses a critical barrier to integrating AI into clinical workflows: trust.

While the study presents compelling evidence, the authors also acknowledge its limitations and outline future directions. One notable challenge is standardizing ultrasound image acquisition across centers to minimize variability that could affect model performance. Expanding the datasets to more diverse populations through multicenter collaborations would further validate the model and broaden its applicability.

The authors also propose integrating additional data types such as genomic profiling and serum biomarkers in forthcoming iterations to capture molecular dimensions of metastasis risk. Such multimodal fusion of imaging and molecular data epitomizes the emerging paradigm of precision oncology, where individualized treatment strategies derive from nuanced patient-specific insights.

From a technical perspective, the architecture handles heterogeneous input streams with fusion techniques designed to preserve the information carried by each modality while letting the network learn from their combination. This design limits information loss during fusion and contributes to the superior predictive performance reported.

Clinically, this technology promises to reduce overtreatment and the morbidity associated with unnecessary lymph node dissections by providing more accurate metastasis risk assessments. It empowers multidisciplinary teams to make informed decisions, enhancing patient quality of life and optimizing healthcare resource utilization.

Moreover, the study addresses ethical considerations surrounding AI deployment, emphasizing the importance of transparency and clinician involvement in model interpretation. By incorporating explainability mechanisms, the authors champion responsible AI innovation aligned with patient safety and regulatory standards.

In essence, Shen and collaborators’ pioneering research epitomizes the synthesis of cutting-edge AI methodologies with clinical exigencies to tackle a pressing oncological challenge. It embodies the potential of explainable deep learning frameworks to not only augment technical diagnostic capabilities but also bridge the gap between machine intelligence and human expertise.

As the medical community continues to grapple with the complexities of cancer diagnosis and management, innovations such as this herald a future where AI augments human judgment in a transparent and trustworthy manner. The ability to predict lateral lymph node metastasis with both high accuracy and explainability could become a cornerstone in personalized thyroid cancer care, ultimately improving patient outcomes on a global scale.

Continued exploration and refinement of such models, coupled with prospective clinical trials, will be crucial in cementing their role in standard practice. The fusion of multimodal imaging, deep learning, and explainability charts an exciting course for precision diagnostics, promising to transform medical paradigms and offer hope to patients worldwide confronting thyroid cancer.

Subject of Research: Explainable multimodal deep learning for predicting lateral lymph node metastasis in thyroid cancer using ultrasound imaging.

Article Title: Explainable multimodal deep learning for predicting thyroid cancer lateral lymph node metastasis using ultrasound imaging.

Article References:

Shen, P., Yang, Z., Sun, J. et al. Explainable multimodal deep learning for predicting thyroid cancer lateral lymph node metastasis using ultrasound imaging. Nat Commun 16, 7052 (2025). https://doi.org/10.1038/s41467-025-62042-z

Image Credits: AI Generated

Tags: advancements in cancer diagnostics, artificial intelligence in cancer management, challenges in preoperative identification of LLNM, explainable AI in healthcare, improving prognosis accuracy in cancer treatment, lymph node metastasis in thyroid cancer, multimodal deep learning in medical imaging, non-invasive imaging techniques, predict lateral lymph node metastasis, reducing unnecessary surgical interventions, thyroid cancer diagnosis using ultrasound, ultrasound imaging for thyroid nodules