In the rapidly evolving landscape of materials science, where data-driven methodologies increasingly dictate the pace of discovery, researchers face a formidable challenge: limited access to large-scale, high-quality experimental data. Unlike fields such as natural language processing, computer vision, or molecular biology, materials research, particularly concerning polymers and inorganic substances, suffers from data scarcity. This fundamental obstacle has spurred innovative approaches that leverage computational simulations to bridge the gap between data availability and predictive power.

Computational materials science has matured over recent decades, with efforts like first-principles calculations and molecular dynamics simulations serving as powerful tools to generate extensive datasets. These simulated data repositories encapsulate rich physical insights into atomic and molecular behavior, enabling the construction of vast computational materials databases. In the inorganic domain, pioneering initiatives such as the Materials Project, AFLOW, OQMD, GNoME, and OMat24 have amassed databases encompassing the full periodic table, providing invaluable resources for researchers worldwide. In polymer science, the Institute of Statistical Mathematics (ISM) has developed RadonPy, an automated platform for conducting comprehensive molecular dynamics simulations to predict polymer properties. This platform supports an industry-academia consortium of 47 organizations, pooling expertise and resources to create one of the largest polymer property databases available today.

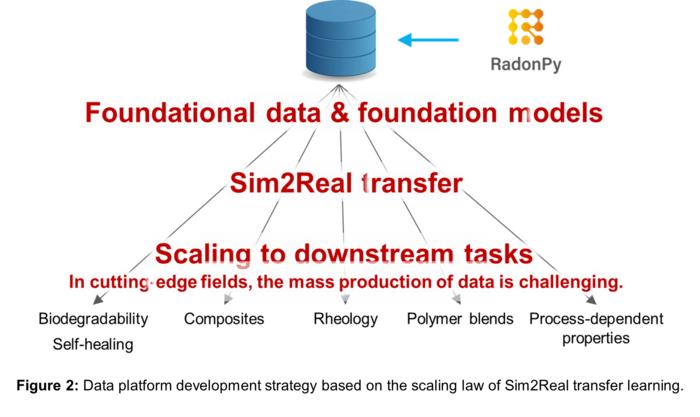

Yet, an unaddressed question persists: how effectively do these computational databases translate to real-world material properties? The emerging paradigm addressing this is Sim2Real transfer learning—borrowing machine learning terminologies, it involves pretraining predictive models on massive simulated datasets and subsequently fine-tuning them with scarce experimental data. This approach leverages the abundant computational data to precondition models, vastly improving their accuracy when applied to experimental predictions. Compared to models relying solely on experimental data, Sim2Real transfer learning has demonstrated superior performance, offering an effective remedy for data scarcity in experimental domains.

.adsslot_S1B03D6Lrm{ width:728px !important; height:90px !important; }

@media (max-width:1199px) { .adsslot_S1B03D6Lrm{ width:468px !important; height:60px !important; } }

@media (max-width:767px) { .adsslot_S1B03D6Lrm{ width:320px !important; height:50px !important; } }

ADVERTISEMENT

The hallmark of the recent study led by Prof. Kenji Fukumizu at ISM, in collaboration with Preferred Networks, lies in uncovering the existence and robustness of scaling laws in Sim2Real transfer learning across diverse materials tasks. The research validates theoretical insights that model performance on experimental property prediction improves as a power law function of the computational database size, expressed mathematically as error = Dn^(-α) + C. Here, n represents the number of computational data points, α the decay rate indicating how quickly error decreases with more data, and C the transfer gap —the irreducible error reflecting the difference between simulation and reality. Identifying and quantifying these parameters allows researchers to benchmark databases, revealing which datasets promise greater predictive gains upon expansion.

Crucially, transfer gap C serves as a practical metric indicating the upper bound of performance improvements achievable through database growth. A smaller C suggests a closer alignment between simulated and experimental domains, meaning increased simulation data will meaningfully enhance prediction accuracy. Conversely, a larger transfer gap signals inherent limitations in the simulation’s applicability, guiding resource allocation away from futility. By analyzing these parameters for databases such as RadonPy and a polymer miscibility database developed in partnership with the Materials Chemistry Consortium (MCC), the study offers rare quantitative evidence that strong scaling and transferability are not abstract concepts but measurable attributes with real-world implications.

Beyond theoretical contributions, the work reveals how scaling laws empower strategic decision-making. Estimating how much additional simulation data is needed to reach targeted accuracy levels enables efficient experimental planning, avoiding costly overproduction of computational data with diminishing returns. Moreover, when predictions plateau due to converged scaling, computational efforts can be redirected to other pressing research problems, optimizing the balance between simulation and experiment.

Significantly, the RadonPy project has translated these findings into concrete design strategies for database development, integrating quantum chemical calculations with deep learning frameworks for polymer-solvent solubility prediction. Continual data production, coupled with iterative improvements in transfer model performance, ensures that such databases remain dynamic, evolving assets rather than static repositories. This ongoing refinement allows researchers to push the envelope of predictive accuracy while maintaining computational efficiency.

The collaborative ecosystem underpinning this endeavor encompasses national institutes, academia, and industry partners, reflecting the multifaceted nature of modern materials research. Access to experimental datasets like the PoLyInfo polymer properties provided by the National Institute for Materials Science (NIMS) enriches validation processes, ensuring models do not stray into theoretical abstraction but remain anchored in empirical reality. Funding support from entities such as the Ministry of Education, Culture, Sports, Science and Technology (MEXT) and the Japan Science and Technology Agency (JST) further demonstrates national commitment to pioneering data-driven materials science.

Looking ahead, establishing universally applicable, scalable data production and analytical workflows will be paramount. Identifying source domains capable of massive, efficient data generation and effectively transferring their insights to domains with limited data availability will redefine how materials innovation proceeds. The study underscores that bridging these domains requires more than generating data; it demands a rigorous understanding of how data quantity impacts predictive performance, and where bottlenecks arise in the simulation-to-reality transfer.

In essence, this research charts a course toward a future where data scarcity no longer constrains material discovery. By systematically quantifying scaling laws in transfer learning, it transforms abstract theoretical expectations into actionable guidelines, enabling scientists to strategically harness computational databases to their fullest potential. This paradigm promises to accelerate the realization of new materials with tailored properties, advancing technologies in energy, electronics, and beyond.

As machine learning and computational physics intertwine ever more deeply, the frameworks validated in this research may become standard components of materials informatics pipelines. The demonstrated power-law scaling behavior serves not only as a performance predictor but as a compass directing the next generation of data-driven materials research. Integrated databases with strong transferability and scalability will be the cornerstone infrastructure empowering breakthroughs, facilitating a smarter, more efficient quest to understand and engineer matter at its fundamental level.

Ultimately, this study is a testament to the synergistic power of interdisciplinary collaboration, uniting statistical mathematics, computational chemistry, and machine learning to overcome one of the field’s most persistent challenges. As researchers worldwide adopt and expand upon these insights, we can anticipate a transformative shift in how materials data are generated, interpreted, and leveraged — ushering in an era of accelerated, adaptive, and precise materials innovation.

Subject of Research:

Scaling laws in Sim2Real transfer learning to improve real-world predictive models from expanding computational materials databases.

Article Title:

Scaling law of Sim2Real transfer learning in expanding computational materials databases for real-world predictions

News Publication Date:

24-May-2025

Web References:

http://dx.doi.org/10.1038/s41524-025-01606-5

References:

Jain et al., The Materials Project: A materials genome approach to accelerating materials innovation. APL Mater 1, 011002 (2013).

Curtarolo et al., AFLOW: An automatic framework for high-throughput materials discovery. Comput Mater Sci 58, 218–226 (2012).

Kirklin et al., The Open Quantum Materials Database (OQMD): assessing the accuracy of DFT formation energies. npj Comput Mater 1, 15010 (2015).

Merchant et al., Scaling deep learning for materials discovery. Nature 624, 80–85 (2023).

Barroso-Luque et al., Open materials 2024 (omat24) inorganic materials dataset and models. arXiv preprint arXiv:2410.12771 (2024).

Hayashi et al., RadonPy: automated physical property calculation using all-atom classical molecular dynamics simulations for polymer informatics. npj Comput Mater 8, 222 (2022).

Aoki et al., Multitask machine learning to predict polymer–solvent miscibility using Flory–Huggins interaction parameters. Macromolecules 56, 5446-5456 (2023).

Wu et al., Machine-learning-assisted discovery of polymers with high thermal conductivity using a molecular design algorithm. npj Comput Mater 5, 66 (2019).

Yamada et al., Predicting materials properties with little data using shotgun transfer learning. ACS Cent Sci 5, 1717-1730 (2019).

Mikami et al., A scaling law for syn2real transfer: How much is your pre-training effective? Machine Learning and Knowledge Discovery in Databases, 477–492 (2023).

Ishii et al., NIMS polymer database PoLyInfo (I): an overarching view of half a million data points. STAM-M 4, 2354649 (2024).

Image Credits:

© The Institute of Statistical Mathematics

Tags: bridging data scarcity in materials sciencechallenges in materials data accessibilitycomputational materials science innovationsdata-driven materials researchfirst-principles calculations in materialsindustry-academia collaboration in materials researchinorganic materials data repositorieslarge-scale experimental data in materialsMaterials Project and data initiativesmolecular dynamics simulations for polymerspolymer property databasesRadonPy automated simulations