Object detection is a crucial component of digital simulation applications, especially in the development of autonomous driving. It involves perceiving objects in the surrounding environment, which provides essential information for the decision-making and planning of intelligent systems. Traditional object detection methods typically involve steps such as feature extraction, object classification, and position regression on images. However, these methods are limited by manually designed features and by classifier performance, which restricts their effectiveness in complex scenes and for objects with significant variations. The advent of deep learning has led to the widespread adoption of object detection methods based on deep neural networks, among which the convolutional neural network (CNN) has emerged as one of the most prominent approaches. By stacking multiple layers of convolution and pooling operations, CNNs can automatically extract meaningful feature representations from image data.

Credit: Beijing Zhongke Journal Publising Co. Ltd.

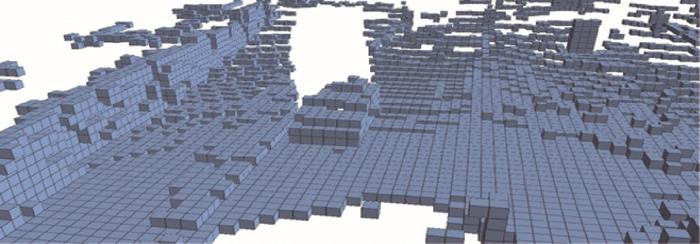

In addition to image data, Light Detection and Ranging (LiDAR) data play a crucial role in object detection tasks, particularly for 3D object detection. LiDAR data represent objects through a set of unordered and discrete points on their surfaces. Accurately detecting point cloud clusters representing objects and providing their pose estimation from these unordered points is a challenging task. LiDAR data, with their unique characteristics, offer high-precision obstacle detection and distance measurement, which contributes to the perception of surrounding roadways, vehicles, and pedestrian targets.
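To make the idea of an unordered point set concrete, the following is a minimal sketch, not a method from the survey. It assumes a cluster has already been segmented and is stored as a list of `(x, y, z)` tuples; the helper name `cluster_box` and the sample coordinates are hypothetical. It derives a coarse position and extent from the cluster and checks that the result does not depend on the order of the points.

```python
import random


def cluster_box(points):
    """Return (centroid, size) of an axis-aligned box around a cluster.

    `points` is a list of (x, y, z) tuples; the order of the list is irrelevant.
    """
    if not points:
        raise ValueError("cluster is empty")
    n = len(points)
    # Mean of each coordinate gives a coarse position estimate.
    centroid = tuple(sum(p[i] for p in points) / n for i in range(3))
    # Per-axis minimum and maximum give the extent of the cluster.
    mins = tuple(min(p[i] for p in points) for i in range(3))
    maxs = tuple(max(p[i] for p in points) for i in range(3))
    size = tuple(hi - lo for lo, hi in zip(mins, maxs))
    return centroid, size


# Hypothetical cluster of four surface points, in metres.
cluster = [(10.0, 2.0, 0.0), (12.0, 2.0, 0.0), (10.0, 4.0, 1.0), (12.0, 4.0, 1.0)]
centroid, size = cluster_box(cluster)

# Shuffling the points does not change the summary for this cluster.
shuffled = cluster[:]
random.Random(0).shuffle(shuffled)
same_after_shuffle = cluster_box(shuffled) == (centroid, size)
```

An axis-aligned box ignores heading, so a full pose estimate would additionally need an orientation, which this sketch leaves out.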

In real-world autonomous driving and related environmental perception scenarios, relying on a single modality presents numerous challenges. Image data provide a wide variety of high-resolution visual information such as color, texture, and shape, but they are susceptible to lighting conditions. In addition, models may struggle with occlusions, where one object blocks the view of another, because of the inherent limitations of camera perspectives. LiDAR, by contrast, performs well in challenging lighting conditions and excels at accurate spatial localization of objects in diverse and harsh weather scenarios. However, it has limitations of its own. The low resolution of LiDAR input data results in sparse point clouds when detecting distant targets, and extracting semantic information from LiDAR data is more challenging than extracting it from image data. Thus, an increasing number of researchers are turning to multimodal environmental object detection.

A robust multimodal perception algorithm can offer richer feature information, better adaptability to diverse environments, and improved detection accuracy, allowing the perception system to deliver reliable results across varied environmental conditions. Multimodal object detection algorithms nevertheless face limitations and open challenges. The first is data annotation: annotating point cloud and image data is complex and time consuming, particularly for large-scale datasets, and accurately labeling point clouds is made harder by their sparsity and by noisy points. The second is representation: as two distinct perception modalities, point clouds and images differ significantly in data structure and feature representation, so current research focuses on integrating information from the two modalities effectively and extracting accurate, comprehensive features. The third is scale: point cloud data typically comprise a large number of 3D coordinates, which places greater demands on computing resources and algorithmic efficiency than image data alone. Addressing these issues is crucial for further advances in multimodal object detection.
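One simple way to relate the two modalities is to project each LiDAR point into the image and attach the pixel value it lands on. The sketch below illustrates that general idea only; it is not the specific fusion scheme of any method covered by the survey. It assumes the points are already expressed in the camera frame (z pointing forward), a pinhole camera with hypothetical intrinsics `fx`, `fy`, `cx`, `cy`, and an image stored as a nested list indexed as `image[row][col]`. The function names are illustrative.

```python
import math


def project_point(point, fx, fy, cx, cy):
    """Project a camera-frame (x, y, z) point to pixel coordinates (u, v).

    Returns None for points at or behind the camera plane.
    """
    x, y, z = point
    if z <= 0:
        return None
    return fx * x / z + cx, fy * y / z + cy


def decorate_points(points, image, fx, fy, cx, cy):
    """Append the pixel value under each projected point to that point."""
    height = len(image)
    width = len(image[0]) if height else 0
    fused = []
    for p in points:
        uv = project_point(p, fx, fy, cx, cy)
        if uv is None:
            continue  # point is behind the camera
        col, row = math.floor(uv[0]), math.floor(uv[1])
        if 0 <= col < width and 0 <= row < height:
            fused.append((*p, image[row][col]))
        # Points that project outside the image are dropped.
    return fused


# Toy 4x4 "image" whose pixel value encodes its position as row * 10 + col.
image = [[row * 10 + col for col in range(4)] for row in range(4)]
points = [
    (0.0, 0.0, 5.0),    # straight ahead, lands on the principal point
    (1.0, -1.0, 2.0),   # right of and above the optical axis
    (0.0, 0.0, -1.0),   # behind the camera, skipped
    (10.0, 0.0, 1.0),   # projects outside the image, skipped
]
fused = decorate_points(points, image, fx=2.0, fy=2.0, cx=2.0, cy=2.0)
```

In practice the points would first be transformed from the LiDAR frame to the camera frame with a calibrated extrinsic matrix, and the per-pixel value would more likely be a learned feature or class score than a raw intensity.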

See the article:

Jia Mingda, Yang Jinming, Meng Weiliang, Guo Jianwei, Zhang Jiguang, Zhang Xiaopeng. 2024. Survey on the fusion of point clouds and images for environmental object detection. Journal of Image and Graphics, 29(06): 1765-1784 [DOI: 10.11834/jig.240030]

https://doi.org/10.11834/jig.240030

Journal: Journal of Image and Graphics

DOI: 10.11834/jig.240030

Article Title: Survey on the fusion of point clouds and images for environmental object detection

Article Publication Date: 19-Jun-2024