Holographic imaging has always been challenged by unpredictable distortions in dynamic environments. Traditional deep learning methods often struggle to adapt to diverse scenes due to their reliance on specific data conditions.

Credit: X. Tong et al., doi 10.1117/1.AP.5.6.066003.

To tackle this problem, researchers at Zhejiang University examined the intersection of optics and deep learning and showed that physical priors play a key role in keeping data aligned with pre-trained models. They studied how spatial coherence and turbulence affect holographic imaging and proposed a method, TWC-Swin, that restores high-quality holographic images in the presence of these disturbances. Their research is reported in the Gold Open Access journal Advanced Photonics.

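Spatial coherence measures how strongly the phases of a light wave are correlated at different points in space; in other words, how orderly the light waves behave. When that correlation is weak, the interference fringes that record a hologram lose contrast, so holographic images become blurry and noisy because they carry less information. Maintaining spatial coherence is therefore crucial for clear holographic imaging.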
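The link between coherence and fringe contrast can be illustrated with the textbook two-beam interference law, I = I1 + I2 + 2·sqrt(I1·I2)·|μ|·cos(δ), where μ is the degree of spatial coherence. The sketch below is a generic optics illustration, not code from the study; the function names are hypothetical, and it assumes a real, non-negative μ and a simple sinusoidal fringe pattern.

```python
import math

def two_beam_intensity(x, i1, i2, mu, period):
    """Two-beam interference intensity at position x.
    mu is the (assumed real, non-negative) degree of spatial coherence."""
    return i1 + i2 + 2.0 * math.sqrt(i1 * i2) * mu * math.cos(2.0 * math.pi * x / period)

def fringe_visibility(i1, i2, mu, period=1.0, samples=1000):
    """Sample one fringe period and return the contrast (Imax - Imin) / (Imax + Imin)."""
    values = [two_beam_intensity(period * k / samples, i1, i2, mu, period)
              for k in range(samples)]
    i_max, i_min = max(values), min(values)
    return (i_max - i_min) / (i_max + i_min)

# With equal beam intensities the visibility equals mu, so fringes fade as coherence drops.
for mu in (1.0, 0.5, 0.1):
    print(f"mu={mu}: visibility={fringe_visibility(1.0, 1.0, mu):.2f}")
# mu=1.0: visibility=1.00
# mu=0.5: visibility=0.50
# mu=0.1: visibility=0.10
```

Because a hologram stores the object's phase and amplitude in these fringes, lower visibility means less recoverable information per recorded pixel.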

Dynamic environments, such as those with oceanic or atmospheric turbulence, introduce random, time-varying fluctuations in the refractive index of the medium. These fluctuations disrupt the phase correlation of the light waves and degrade spatial coherence. As a result, the holographic image may become blurred, distorted, or even lost entirely.
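How a random medium erodes phase correlation can be sketched with a Monte Carlo estimate. Assuming, purely for illustration, that turbulence adds a zero-mean Gaussian phase difference with standard deviation σ between two points, the surviving coherence factor is |⟨exp(iΔφ)⟩|, which analytically equals exp(−σ²/2). This is a simplified statistical model, not the turbulence model used in the study, and the function name is hypothetical.

```python
import cmath
import math
import random

def coherence_after_turbulence(sigma, trials=100_000, seed=0):
    """Monte Carlo estimate of the coherence factor |<exp(i*delta_phi)>|.
    Assumption: delta_phi ~ Normal(0, sigma**2), in radians."""
    rng = random.Random(seed)
    total = 0j
    for _ in range(trials):
        total += cmath.exp(1j * rng.gauss(0.0, sigma))
    return abs(total / trials)

for sigma in (0.0, 0.5, 1.0, 2.0):
    estimate = coherence_after_turbulence(sigma)
    analytic = math.exp(-sigma ** 2 / 2)  # characteristic function of a Gaussian
    print(f"sigma={sigma:.1f} rad  estimate={estimate:.3f}  exp(-sigma^2/2)={analytic:.3f}")
```

Stronger phase fluctuations (larger σ) drive the coherence factor toward zero, which is the loss of spatial coherence described above.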

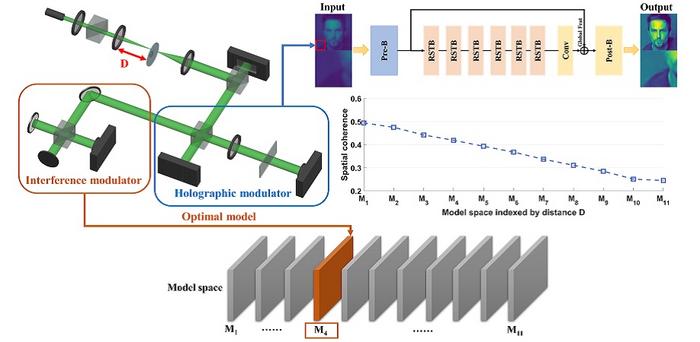

The researchers at Zhejiang University developed the TWC-Swin method to address these challenges. TWC-Swin, short for “train-with-coherence swin transformer,” leverages spatial coherence as a physical prior to guide the training of a deep neural network. This network, based on the Swin transformer architecture, excels at capturing both local and global image features.
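"Swin" stands for "shifted window": a Swin transformer computes self-attention inside non-overlapping local windows and, in alternating blocks, cyclically shifts the feature map by half a window so that neighboring windows exchange information, building up global context across layers. The toy sketch below shows only that partition-and-shift step on a small grid of integers. It follows the generic Swin design rather than the TWC-Swin code, the helper names are hypothetical, and it omits the attention mask that real Swin blocks apply to wrapped-around cells.

```python
def cyclic_shift(grid, shift):
    """Roll a 2-D grid up and to the left by `shift` cells, wrapping around
    (the same effect as torch.roll with shifts=(-shift, -shift))."""
    h, w = len(grid), len(grid[0])
    return [[grid[(r + shift) % h][(c + shift) % w] for c in range(w)] for r in range(h)]

def window_partition(grid, m):
    """Split an H x W grid into non-overlapping m x m windows in row-major order.
    Self-attention would be computed only among cells of the same window."""
    h, w = len(grid), len(grid[0])
    assert h % m == 0 and w % m == 0, "grid size must be divisible by window size"
    windows = []
    for r0 in range(0, h, m):
        for c0 in range(0, w, m):
            windows.append([row[c0:c0 + m] for row in grid[r0:r0 + m]])
    return windows

# Toy 4x4 "feature map" whose entries are their flat indices.
feature_map = [[4 * r + c for c in range(4)] for r in range(4)]
regular = window_partition(feature_map, 2)                    # regular windows
shifted = window_partition(cyclic_shift(feature_map, 1), 2)   # shifted windows
print(regular[0])  # [[0, 1], [4, 5]]
print(shifted[0])  # [[5, 6], [9, 10]]
```

Cells 5, 6, 9 and 10 sat in four different regular windows; after the shift they share one window, which is how local attention gradually propagates information across the whole image.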

To test their method, the authors designed an optical system that produced holographic images under varying spatial coherence and turbulence conditions. These holograms, recorded from natural objects, served as training and testing data for the neural network. The results demonstrate that TWC-Swin effectively restores holographic images even under low spatial coherence and arbitrary turbulence, surpassing traditional convolutional network-based methods. Furthermore, the method reportedly generalizes well, extending to unseen scenes that were not included in the training data.
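Claims of generalization to unseen scenes rest on keeping test scenes out of training entirely, even though every scene is imaged under every condition. The sketch below shows one way to build such a scene-level split over a grid of coherence and turbulence settings. The function name, scene identifiers and condition values are hypothetical placeholders; the article does not describe the authors' actual data split.

```python
import itertools
import random

def build_splits(scene_ids, coherence_levels, turbulence_levels, test_fraction=0.2, seed=0):
    """Pair every scene with every (coherence, turbulence) condition, but hold out
    a fraction of scenes entirely so test scenes never appear during training."""
    rng = random.Random(seed)
    scenes = list(scene_ids)
    rng.shuffle(scenes)
    n_test = max(1, int(len(scenes) * test_fraction))
    test_scenes, train_scenes = set(scenes[:n_test]), set(scenes[n_test:])
    conditions = list(itertools.product(coherence_levels, turbulence_levels))
    train = [(s, c, t) for s in sorted(train_scenes) for c, t in conditions]
    test = [(s, c, t) for s in sorted(test_scenes) for c, t in conditions]
    return train, test

# Hypothetical example: 10 scenes, 3 coherence levels, 3 turbulence strengths.
scenes = [f"scene_{i:02d}" for i in range(10)]
train, test = build_splits(scenes, [1.0, 0.5, 0.2], ["none", "weak", "strong"])
print(len(train), len(test))  # 72 18
```

Splitting by scene rather than by individual hologram prevents the network from seeing the same object under a different condition at test time.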

This research breaks new ground in addressing image degradation in holographic imaging across diverse scenes. By integrating physical principles into deep learning, the study sheds light on a successful synergy between optics and computer science. As the future unfolds, this work paves the way for enhanced holographic imaging, empowering us to see clearly through the turbulence.

For details, read the original article by X. Tong et al., "Harnessing the magic of light: spatial coherence instructed swin transformer for universal holographic imaging," Adv. Photon. 5(6) 066003 (2023), doi 10.1117/1.AP.5.6.066003.

Journal

Advanced Photonics

DOI

10.1117/1.AP.5.6.066003

Article Title

Harnessing the magic of light: spatial coherence instructed swin transformer for universal holographic imaging

Article Publication Date

25-Oct-2023