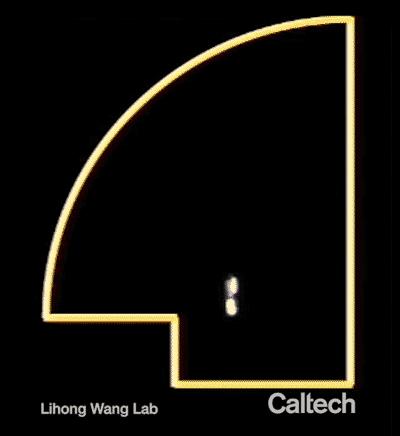

Credit: Caltech

There are things in life that can be predicted reasonably well. The tides rise and fall. The moon waxes and wanes. A billiard ball bounces around a table according to orderly geometry.

And then there are things that defy easy prediction: The hurricane that changes direction without warning. The splashing of water in a fountain. The graceful disorder of branches growing from a tree.

These phenomena and others like them can be described as chaotic systems. They are notable for exhibiting behavior that is predictable at first but grows increasingly hard to predict with time.

Because of the large role that chaotic systems play in the world around us, scientists and mathematicians have long sought to better understand them. Now, Caltech’s Lihong Wang, the Bren Professor in the Andrew and Peggy Cherng Department of Medical Engineering, has developed a new tool that might help in this quest.

In the latest issue of Science Advances, Wang describes how he used an ultrafast camera of his own design, which records video at one billion frames per second, to observe the movement of laser light in a chamber specially designed to induce chaotic reflections.

“Some cavities are non-chaotic, so the path the light takes is predictable,” Wang says. But in the current work, he and his colleagues have used that ultrafast camera as a tool to study a chaotic cavity, “in which the light takes a different path every time we repeat the experiment.”

The camera makes use of a technology called compressed ultrafast photography (CUP), which Wang has demonstrated in other research to be capable of speeds as fast as 70 trillion frames per second. The speed at which a CUP camera takes video makes it capable of seeing light, the fastest thing in the universe, as it travels.

But CUP cameras have another feature that makes them uniquely suited for studying chaotic systems. Unlike a traditional camera that shoots one frame of video at a time, a CUP camera essentially shoots all of its frames at once. This allows the camera to capture the entirety of a laser beam’s chaotic path through the chamber all in one go.

That matters because in a chaotic system, the behavior is different every time. If the camera only captured part of the action, the behavior that was not recorded could never be studied, because it would never occur in exactly the same way again. It would be like trying to photograph a bird, but with a camera that can only capture one body part at a time; furthermore, every time the bird landed near you, it would be a different species. Although you could try to assemble all your photos into one composite bird image, that cobbled-together bird would have the beak of a crow, the neck of a stork, the wings of a duck, the tail of a hawk, and the legs of a chicken. Not exactly useful.

Wang says that the ability of his CUP camera to capture the chaotic movement of light may breathe new life into the study of optical chaos, which has applications in physics, communications, and cryptography.

“It was a really hot field some time ago, but it’s died down, maybe because we didn’t have the tools we needed,” he says. “The experimentalists lost interest because they couldn’t do the experiments, and the theoreticians lost interest because they couldn’t validate their theories experimentally. This was a fun demonstration to show people in that field that they finally have an experimental tool.”

###

The paper describing the research, titled “Real-time observation and control of optical chaos,” appears in the January 13 issue of Science Advances. Co-authors are Linran Fan, formerly of Caltech, now an assistant professor at Wyant College of Optical Sciences at the University of Arizona; and Xiaodong Yan and Han Wang, of the University of Southern California.

Funding for the research was provided by the Army Research Office Young Investigator Program, the Air Force Office of Scientific Research, the National Science Foundation, and the National Institutes of Health.

Media Contact

Emily Velasco

