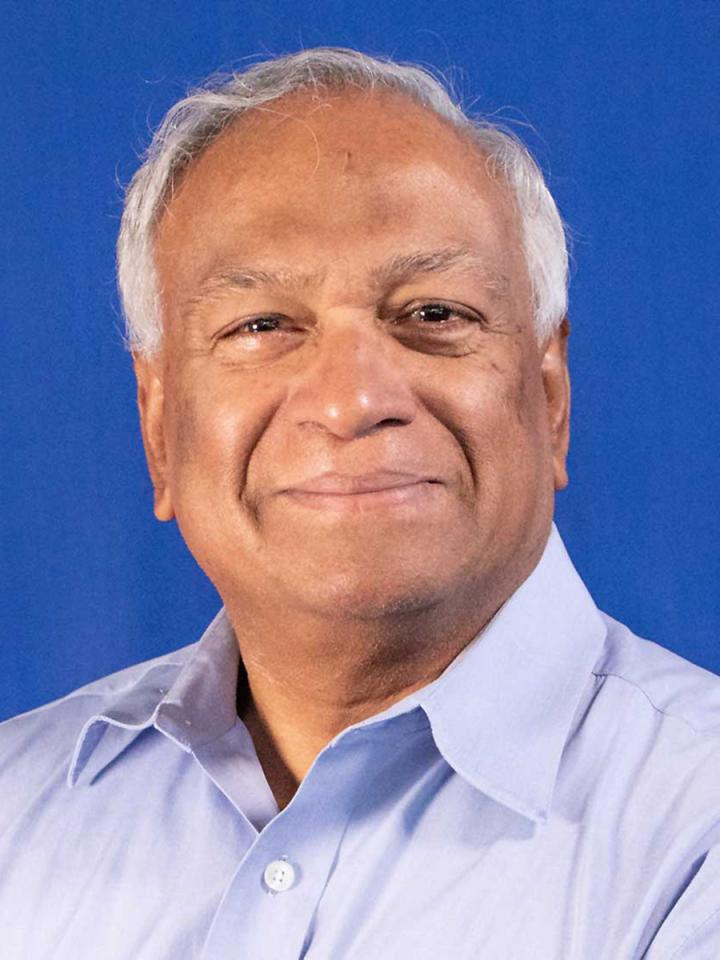

UTA researcher working to make data more usable and accessible

Credit: UT Arlington

A computer science professor at The University of Texas at Arlington is working with researchers at other universities to develop a highly scalable process for connecting data points across multiple graph layers of a very large dataset, one that will allow analysts to examine the data in greater depth.

Sharma Chakravarthy and his colleagues from the University of North Texas and Penn State University received a three-year, $995,871 grant from the National Science Foundation for the research. UTA’s share is $373,868.

UTA students also are assisting in the research, including doctoral student Abhishek Santra and several master’s students in the computer science program under Chakravarthy’s guidance.

The team is using a process known as network decoupling, in which efficient algorithms convert a large dataset into individual, interconnected layers. Any combination of layers can then be chosen for analysis. The results preserve the data's semantics and structure and lend themselves to visualization, making it easier for analysts to picture how the layers of data fit together.
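To make the idea of analyzing layers separately and then combining them more concrete, here is a minimal toy sketch in Python. It is not the team's algorithm: it assumes every layer is a plain edge list over the same set of nodes, uses connected components as a stand-in for per-layer analysis, and combines layers by grouping nodes that fall in the same component in every chosen layer. The function names `components`, `analyze_layers` and `compose` are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple

Edge = Tuple[str, str]


def components(nodes: Sequence[str], edges: Sequence[Edge]) -> Dict[str, int]:
    """Label every node with a connected-component id for one layer (union-find)."""
    parent = {n: n for n in nodes}

    def find(x: str) -> str:
        # Path halving: point each visited node at its grandparent.
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    for u, v in edges:
        ru, rv = find(u), find(v)
        if ru != rv:
            parent[ru] = rv

    ids: Dict[str, int] = {}
    return {n: ids.setdefault(find(n), len(ids)) for n in nodes}


def analyze_layers(nodes: Sequence[str],
                   layers: Dict[str, Sequence[Edge]]) -> Dict[str, Dict[str, int]]:
    """Decoupled step (hypothetical): analyze each layer independently of the others."""
    return {name: components(nodes, edges) for name, edges in layers.items()}


def compose(nodes: Sequence[str],
            per_layer: Dict[str, Dict[str, int]],
            chosen: Sequence[str]) -> List[List[str]]:
    """Combine step (hypothetical): group nodes sharing a component in every chosen layer."""
    groups: Dict[Tuple[int, ...], List[str]] = defaultdict(list)
    for n in nodes:
        groups[tuple(per_layer[name][n] for name in chosen)].append(n)
    return sorted(groups.values())


nodes = ["a", "b", "c", "d"]
layers = {"L1": [("a", "b"), ("c", "d")], "L2": [("a", "b"), ("b", "c")]}
per_layer = analyze_layers(nodes, layers)
print(compose(nodes, per_layer, ["L1", "L2"]))  # [['a', 'b'], ['c'], ['d']]
print(compose(nodes, per_layer, ["L2"]))        # [['a', 'b', 'c'], ['d']]
```

The design point the sketch illustrates is reuse: each layer is analyzed once, and those stored results can be recombined for whichever layers an analyst picks, without re-analyzing the full multi-layer graph.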

The project comprises three parts:

- modeling to determine how to make the process work;

- finding the most efficient way to analyze the representation produced; and

- representing the data graphically.

“Multi-layer network decoupling allows us to make sense of a huge number of datapoints and look at the data in many different ways,” Chakravarthy said. “It’s not a new model, but our approach to its analysis is new. The results are customizable to the needs of the researcher and are more actionable, tangible and easier to understand. Down the road, we’d like to try fusing different types of data, such as video and audio, with the structured data.”

Chakravarthy and his colleagues have tested their process on several datasets. One is a COVID-19 dataset that records the number of cases in every county of the United States on a given day, then again two weeks later. In their multi-layer approach, each county is a node that can be characterized by features such as how its case count compares with those of adjacent counties, and the result is modeled as a graph. This can reveal trends in geography, spread and other useful information.
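As a rough illustration of how one such county layer might be built, the Python sketch below connects two adjacent counties when their case growth over the two-week window is similar. Everything here is an assumption for illustration, not the team's model: the inputs (cumulative cases on both dates plus a list of adjacent county pairs), the relative-growth measure, the 0.25 tolerance, the placeholder county names and the helper names `similarity_layer` and `isolated`.

```python
from typing import Dict, List, Sequence, Set, Tuple

Pair = Tuple[str, str]


def similarity_layer(cases: Dict[str, Tuple[int, int]],
                     adjacency: Sequence[Pair],
                     tolerance: float = 0.25) -> Set[Pair]:
    """Keep an edge between adjacent counties whose two-week case growth is similar."""
    # Relative growth from the first day to two weeks later; max(..., 1) avoids division by zero.
    rate = {c: (after - before) / max(before, 1) for c, (before, after) in cases.items()}
    return {
        (min(u, v), max(u, v))
        for u, v in adjacency
        if abs(rate[u] - rate[v]) <= tolerance
    }


def isolated(cases: Dict[str, Tuple[int, int]], edges: Set[Pair]) -> List[str]:
    """Counties with no edge in this layer: growth unlike every listed neighbor, or no neighbors."""
    linked = {c for edge in edges for c in edge}
    return sorted(c for c in cases if c not in linked)


# Made-up toy inputs: (cases on day 0, cases 14 days later).
cases = {"county_A": (100, 150), "county_B": (200, 290), "county_C": (50, 150)}
adjacency = [("county_A", "county_B"), ("county_B", "county_C")]
edges = similarity_layer(cases, adjacency)
print(edges)                   # {('county_A', 'county_B')}
print(isolated(cases, edges))  # ['county_C']
```

In this toy run, county_C's cases tripled while its neighbor's grew by under half, so it ends up with no edge, which is the kind of geographic outlier a layer like this could surface.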

Another is a dataset of automobile accident locations, where each accident is a node that can be analyzed by time of day or year, weather and other characteristics to identify potential causes and related safety issues.

A third is the Internet Movie Database, better known as IMDb, where the researchers could link every actor's movies to directors and other actors, revealing trends such as which groups of actors certain directors cast in their movies, along with other types of interactions.

“Big data analysis is difficult because of the immense amount of information involved in each dataset and the complex embedded relationships,” said Hong Jiang, chair of UTA’s Computer Science and Engineering Department. “The ability to categorize massive datasets efficiently so the data becomes useful and accessible is very important, and Dr. Chakravarthy and his colleagues have applied a creative framework to an existing model or representation that will yield better, deeper results.”

###

– Written by Jeremy Agor, College of Engineering

Media Contact

Herb Booth


Original Source

https:/