Credit: Carnegie Mellon College of Engineering

When scientists try to predict the spread of something across populations, whether a coronavirus or a piece of misinformation, they use mathematical models. Typically, they study the first few steps of the spread and use that early rate to project how far and wide it will go.

But what happens if a pathogen mutates, or a piece of information is modified, in a way that changes how fast it spreads? In a new study appearing in this week’s issue of Proceedings of the National Academy of Sciences (PNAS), a team of Carnegie Mellon University researchers shows for the first time how much these changes matter.

“These evolutionary changes have a huge impact,” says CyLab faculty member Osman Yagan, an associate research professor in Electrical and Computer Engineering (ECE) and corresponding author of the study. “If you don’t consider the potential changes over time, you will be wrong in predicting the number of people that will get sick or the number of people who are exposed to a piece of information.”

Most people are familiar with epidemics of disease, but information itself, which now travels at lightning speed over social media, can experience its own kind of epidemic and “go viral.” Whether a piece of information goes viral can depend on how the original message is tweaked.

“Some pieces of misinformation are intentional, but some may develop organically when many people sequentially make small changes, like a game of ‘telephone,’” says Yagan. “A seemingly boring piece of information can evolve into a viral tweet, and we need to be able to predict how these things spread.”

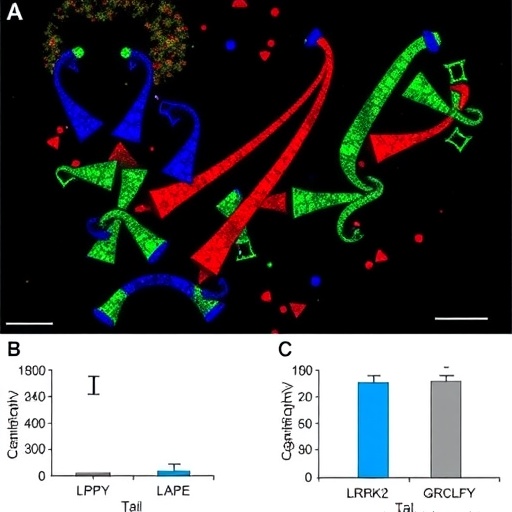

In their study, the researchers developed a mathematical theory that takes these evolutionary changes into account. They then tested the theory against thousands of computer-simulated epidemics on real-world networks, such as Twitter for the spread of information or a hospital for the spread of disease.

For infectious disease, the team ran thousands of simulations using data from two real-world networks: a contact network among students, teachers, and staff at a US high school, and a contact network among staff and patients in a hospital in Lyon, France.

These simulations served as a test bed: whichever theory better matched the simulated outcomes would prove to be the more accurate one.

“We showed that our theory works over real-world networks,” says the study’s first author, Rashad Eletreby, who was a Carnegie Mellon Ph.D. student when he wrote the paper. “Traditional models that don’t consider evolutionary adaptations fail at predicting the probability of the emergence of an epidemic.”
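To see why ignoring evolution can understate the chance of an outbreak, consider a textbook two-type branching process. This is a simplified toy, not the authors’ network-based theory: it ignores network structure entirely, and the Poisson offspring distributions, the function names, and the parameter values (an original strain with reproduction number 0.9, a mutant with 1.5, and a 10% per-transmission mutation probability) are all hypothetical choices for illustration. A model that looks only at the original strain’s early spreading rate predicts that a chain of infections almost surely dies out, while allowing mutation gives a clearly positive probability of emergence.

```python
import math


def extinction_prob(pgf, tol=1e-12, max_iter=100_000):
    """Smallest fixed point q = pgf(q) on [0, 1], found by iterating from q = 0.

    For a branching process this is the probability that the chain of
    cases started by one infected individual eventually dies out.
    """
    q = 0.0
    for _ in range(max_iter):
        q_next = pgf(q)
        if abs(q_next - q) < tol:
            return q_next
        q = q_next
    return q


def emergence_without_mutation(r1):
    # Hypothetical "traditional" model: every case has Poisson(r1) offspring,
    # whose probability generating function is exp(r1 * (s - 1)).
    q = extinction_prob(lambda s: math.exp(r1 * (s - 1)))
    return 1 - q


def emergence_with_mutation(r1, r2, mu):
    # Mutated (type-2) cases produce only type-2 cases, Poisson(r2) each.
    q2 = extinction_prob(lambda s: math.exp(r2 * (s - 1)))
    # Each original (type-1) case has Poisson(r1) offspring; each offspring
    # is a mutant with probability mu, otherwise another type-1 case.
    q1 = extinction_prob(lambda s: math.exp(r1 * ((1 - mu) * s + mu * q2 - 1)))
    return 1 - q1


if __name__ == "__main__":
    r1, r2, mu = 0.9, 1.5, 0.1  # hypothetical illustrative values
    print(f"ignoring mutation: {emergence_without_mutation(r1):.3f}")
    print(f"with mutation:     {emergence_with_mutation(r1, r2, mu):.3f}")
```

With these made-up numbers, the no-mutation model gives an emergence probability of essentially zero, while the mutation-aware version gives roughly 0.18. The point is only qualitative: projecting from the first strain’s spreading rate alone can miss outbreaks that evolution makes possible.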

The study is not a silver bullet for predicting, with 100% accuracy, the spread of today’s coronavirus or of fake news in today’s volatile political environment; doing that would require real-time data tracking how the pathogen or the information evolves. Still, the authors say it is a big step.

“We’re one step closer to reality,” says Eletreby.

###

Other authors on the study included ECE Ph.D. student Yong Zhuang, Institute for Software Research professor Kathleen Carley, and Princeton Electrical Engineering professor Vincent Poor.

Media Contact

Daniel Tkacik


412-268-1187