An algorithm speeds up the planning process robots use to adjust their grip on objects, for picking and sorting, or tool use

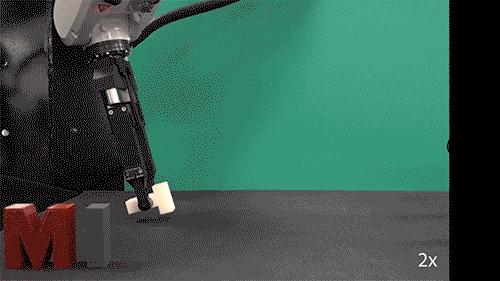

Credit: Image courtesy of Alberto Rodriguez, Nikhil Chavan-Dafle, Rachel Holladay

If you’re at a desk with a pen or pencil handy, try this move: Grab the pen by one end with your thumb and index finger, and push the other end against the desk. Slide your fingers down the pen, then flip it upside down, without letting it drop. Not too hard, right?

But for a robot — say, one that’s sorting through a bin of objects and attempting to get a good grasp on one of them — this is a computationally taxing maneuver. Before even attempting the move it must calculate a litany of properties and probabilities, such as the friction and geometry of the table, the pen, and its two fingers, and how various combinations of these properties interact mechanically, based on fundamental laws of physics.

Now MIT engineers have found a way to significantly speed up the planning process required for a robot to adjust its grasp on an object by pushing that object against a stationary surface. Whereas traditional algorithms would require tens of minutes for planning out a sequence of motions, the team’s new approach shaves this preplanning process down to less than a second.

Alberto Rodriguez, associate professor of mechanical engineering at MIT, says the speedier planning process will enable robots, particularly in industrial settings, to quickly figure out how to push against, slide along, or otherwise use features in their environments to reposition objects in their grasp. Such nimble manipulation is useful for any tasks that involve picking and sorting, and even intricate tool use.

“This is a way to extend the dexterity of even simple robotic grippers, because at the end of the day, the environment is something every robot has around it,” Rodriguez says.

The team’s results are published today in The International Journal of Robotics Research. Rodriguez’ co-authors are lead author Nikhil Chavan-Dafle, a graduate student in mechanical engineering, and Rachel Holladay, a graduate student in electrical engineering and computer science.

Physics in a cone

Rodriguez’ group works on enabling robots to leverage their environment to help them accomplish physical tasks, such as picking and sorting objects in a bin.

Existing algorithms typically take hours to preplan a sequence of motions for a robotic gripper, mainly because, for every motion that it considers, the algorithm must first calculate whether that motion would satisfy a number of physical laws, such as Newton’s laws of motion and Coulomb’s law describing frictional forces between objects.

“It’s a tedious computational process to integrate all those laws, to consider all possible motions the robot can do, and to choose a useful one among those,” Rodriguez says.

He and his colleagues found a compact way to solve the physics of these manipulations, in advance of deciding how the robot’s hand should move. They did so by using “motion cones,” which are essentially visual, cone-shaped maps of friction.

The inside of the cone depicts all the pushing motions that could be applied to an object in a specific location, while satisfying the fundamental laws of physics and enabling the robot to keep hold of the object. The space outside of the cone represents all the pushes that would in some way cause an object to slip out of the robot’s grasp.
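As a rough illustration of that inside-versus-outside test, the sketch below checks whether a push direction lies inside a two-dimensional cone. It is a simplification and not the team's implementation: it uses the textbook Coulomb friction cone as a stand-in for a motion cone, and the function names `friction_cone_edges` and `in_cone`, the flat 2D geometry, and the friction coefficient of 0.5 are all assumptions chosen for illustration.

```python
def cross(a, b):
    """2D cross product (z-component) of vectors a and b."""
    return a[0] * b[1] - a[1] * b[0]


def friction_cone_edges(mu):
    """Edges of a Coulomb friction cone whose contact normal points along +y.

    Hypothetical helper: returns (right_edge, left_edge), where the tangential
    component of each edge is mu times its normal component.
    """
    if mu <= 0:
        raise ValueError("friction coefficient must be positive")
    return (mu, 1.0), (-mu, 1.0)


def in_cone(push, right_edge, left_edge, tol=1e-9):
    """Return True if `push` lies inside (or on the boundary of) the cone.

    Assumes the cone is narrower than 180 degrees and that left_edge is
    counterclockwise from right_edge.
    """
    if cross(right_edge, left_edge) <= tol:
        raise ValueError("edges must span a cone narrower than 180 degrees")
    # Inside means: counterclockwise of the right edge, clockwise of the left.
    return cross(right_edge, push) >= -tol and cross(push, left_edge) >= -tol


right, left = friction_cone_edges(0.5)   # assumed friction coefficient
straight_push = (0.0, 1.0)               # along the contact normal
sideways_push = (1.0, 1.0)               # too much tangential motion
print(in_cone(straight_push, right, left))
print(in_cone(sideways_push, right, left))
```

In this toy picture, a push along the normal stays inside the cone, while a push with too large a sideways component falls outside it, which corresponds to the slipping case described above.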

“Seemingly simple variations, such as how hard the robot grasps the object, can significantly change how the object moves in the grasp when pushed,” Holladay explains. “Based on how hard you’re grasping, there will be a different motion. And that’s part of the physical reasoning that the algorithm handles.”

The team’s algorithm calculates a motion cone for different possible configurations between a robotic gripper, an object that it is holding, and the environment against which it is pushing, in order to select and sequence different feasible pushes to reposition the object.
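The idea of selecting and sequencing feasible pushes can be sketched as a search over configurations. The example below is only a toy under stated assumptions: the article does not describe the search strategy, so a plain breadth-first search is swapped in here, and `plan_pushes`, `toy_pushes`, and the integer "slide offset" state are hypothetical names and a made-up model, with `toy_pushes` standing in for a motion cone query.

```python
from collections import deque


def plan_pushes(start, goal, feasible_pushes):
    """Breadth-first search for a shortest sequence of pushes.

    `feasible_pushes(state)` is a hypothetical stand-in for a motion cone
    query: it returns (push_label, next_state) pairs that keep the object
    in the grasp. Returns a list of push labels, or None if no plan exists.
    """
    queue = deque([start])
    parent = {start: None}  # state -> (previous state, push label)
    while queue:
        state = queue.popleft()
        if state == goal:
            pushes = []
            while parent[state] is not None:
                state, label = parent[state]
                pushes.append(label)
            return pushes[::-1]
        for label, nxt in feasible_pushes(state):
            if nxt not in parent:
                parent[nxt] = (state, label)
                queue.append(nxt)
    return None


def toy_pushes(offset):
    """Made-up feasibility rule for an object sliding between two fingers.

    The state is an integer slide offset from 0 to 5. A one-unit slide is
    always allowed; a two-unit slide is allowed only at even offsets.
    """
    moves = []
    if offset + 1 <= 5:
        moves.append(("slide+1", offset + 1))
    if offset % 2 == 0 and offset + 2 <= 5:
        moves.append(("slide+2", offset + 2))
    return moves


print(plan_pushes(0, 5, toy_pushes))
```

The design point this is meant to convey is that once feasibility can be answered cheaply for each configuration, the planner spends its time on sequencing rather than on re-solving the physics for every candidate motion.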

“It’s a complicated process but still much faster than the traditional method — fast enough that planning an entire series of pushes takes half a second,” Holladay says.

Big plans

The researchers tested the new algorithm on a physical setup with a three-way interaction, in which a simple robotic gripper was holding a T-shaped block and pushing against a vertical bar. They used multiple starting configurations, with the robot gripping the block at a particular position and pushing it against the bar from a certain angle. For each starting configuration, the algorithm instantly generated the map of all the possible forces that the robot could apply and the position of the block that would result.

“We did several thousand pushes to verify our model correctly predicts what happens in the real world,” Holladay says. “If we apply a push that’s inside the cone, the grasped object should remain under control. If it’s outside, the object should slip from the grasp.”

The researchers found that the algorithm’s predictions reliably matched the physical outcome in the lab, planning out sequences of motions — such as reorienting the block against the bar before setting it down on a table in an upright position — in less than a second, compared with traditional algorithms that take over 500 seconds to plan out.

“Because we have this compact representation of the mechanics of this three-way-interaction between robot, object, and their environment, we can now attack bigger planning problems,” Rodriguez says.

The group is hoping to apply and extend its approach to enable a robotic gripper to handle different types of tools, for instance in a manufacturing setting.

“Most factory robots that use tools have a specially designed hand, so instead of having the ability to grasp a screwdriver and use it in a lot of different ways, they just make the hand a screwdriver,” Holladay says. “You can imagine that requires less dexterous planning, but it’s much more limiting. We’d like a robot to be able to use and pick lots of different things up.”


This research was supported, in part, by Mathworks, the MIT-HKUST Alliance, and the National Science Foundation.

Written by Jennifer Chu, MIT News Office

Related links

Paper: “Planar in-hand manipulation via motion cones.”

https:/

Video: “In-hand manipulation via motion cones”

https:/

MIT robot combines vision and touch to learn the game of Jenga

http://news.

Robo-picker grasps and packs

http://news.

Giving robots a more nimble grasp

http://news.
